Martinjmpr

Wiffleball Batter

OK this is a sequel to my previous thread posted here: https://forum.expeditionportal.com/threads/dc-dc-charger-as-an-alternative-to-generator.246276/

I appreciate all the help I got in that thread, especially from Dave in AZ.

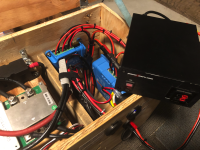

I'm now zeroing in on one specific component of my system, the Renogy 20A DC-DC charger. I put this in almost 5 years ago to keep a little "power box" charged up. I made the "power box" from a trolling motor battery case and a 90AH FLA (wet) battery. https://forum.expeditionportal.com/threads/installing-renogy-dc-dc-charger-in-2018-f-150.214386/

Anyway, I've now upgraded that FLA to a 100AH LiFePo battery. But I seem to be having issues with the charger.

The LiFePo battery has a very cool Bluetooth system that displays battery statistics (with the old one I had to "guess" the charge level based on the voltage.)

First time I hooked up the DC-DC charger to the battery I got this as the display:

Notice the Current display (top row center): 20.35A going into the battery. I was stoked! My system was working and working great! This was at 16:21.

But just 7 minutes later, THIS was the display:

Current showing as "zero." Keep in mind I changed absolutely NOTHING in those 7 minutes. I think I may have cycled the truck's ignition off and on, but that's it.

The DC-DC charger is working (green pilot light on.) At this point DIP switch SW5 was in the "on" position (for a Lead-Acid battery.)

The next thing I did was flip SW5 to "off," which, per the manual, is the proper setting for a LiFePo battery.

No luck. I have to emphasize that the DC-DC charger appears to be working (that is, it powers on.) There are no fault codes (which would be indicated by a red light on the charger.)

Before anyone asks, yes the engine was running.

Just to double check, I measured voltage going in and coming out of the charger.

Voltage going in was 14.45V.

But voltage going out was only 12.80V.

I consulted the manual and set the DIP switches for a LiFePo battery charging at 14.4V. Settings were:

- SW1 ON
- SW2 ON
- SW3 OFF
- SW4 ON
- SW5 OFF

Pulled up the app again and still no charging to the battery:

Note that this was after about 45 minutes of driving around with the battery connected to the charger.

So I'm kind of at my wit's end here, and I'd appreciate input from anyone who might have an idea what is going on.

Several possibilities have occurred to me:

1. Maybe the battery IS charging but the Bluetooth BMS isn't picking it up. That doesn't seem likely, since the measured voltage at the output of the charger is only 12.80V and the fully charged battery is at over 13V per the BMS.

2. Maybe there is some kind of "sensing logic" in the DC-DC converter that is telling the converter "this battery is over 13V, therefore charging is not needed" and the charging function is turned off. I've read the (awful, really horrible) manual front to back, and if this happens it doesn't say so anywhere I can find. Also, this doesn't explain why, when I FIRST hooked it up and the battery was at 96%, it DID show it charging at 20.35A.

3. Maybe the charger is just defective? It has been sitting in the back of the truck since early 2020. It's been bounced around (although it sits under the seat, and there is a piece of carpet underneath it that provides a little padding.) Perhaps it got damaged somehow? The only way to know for sure, I think, would be to swap in a "known good" charger and see whether the result is any different.

As I said in the other thread, I'm pretty sure I have the DIP switches in the correct setting and there are literally no other settings on the charger that I'm aware of.

Any other thoughts on what might be causing this? As of right now it's looking like a defective charger, but I can't help but wonder if there is something else going on. I'm specifically wondering why the charger is only putting out 12.8V when there are 14.4V going in.

In the previous thread it was mentioned that the 8AWG cable connecting the DC-DC charger to the battery was inadequate and I should have used 6AWG. I understand that but at least initially I WAS getting 20.35A to the battery, so I'm not sure this is it.
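For a rough sense of how much the cable gauge matters, here is a quick voltage-drop sketch. The numbers are assumptions, not measurements from my truck: copper wire at the standard published resistance per 1000 ft (about 20 C), and a hypothetical 10 ft one-way run between charger and battery (20 ft round trip counting the negative lead).

```python
# Rough voltage-drop estimate for the charger-to-battery cable run.
# ASSUMPTIONS (not measured): copper conductors at roughly 20 C, standard
# published resistance per 1000 ft, and a hypothetical 10 ft one-way run.

OHMS_PER_1000_FT = {
    "8AWG": 0.6282,
    "6AWG": 0.3951,
}


def voltage_drop(gauge: str, one_way_ft: float, amps: float) -> float:
    """Return the drop in volts across the round trip (positive + negative leads)."""
    round_trip_ft = 2 * one_way_ft
    resistance = OHMS_PER_1000_FT[gauge] * round_trip_ft / 1000  # ohms
    return amps * resistance  # Ohm's law: V = I * R


if __name__ == "__main__":
    for gauge in ("8AWG", "6AWG"):
        drop = voltage_drop(gauge, one_way_ft=10, amps=20)
        print(f"{gauge}: {drop:.2f} V drop at 20 A")
```

With those assumed numbers it prints about 0.25 V of drop for 8AWG and about 0.16 V for 6AWG at 20 A. Since the drop is current times resistance, it is zero whenever no current is flowing, whatever the gauge.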

Final question: am I right in thinking that once the battery voltage (on the power box) drops below 12.8V, it should start charging if the charger is putting out 12.8V?
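Here is the simple mental model behind that question, written out as a sketch. It is NOT Renogy's actual control logic: it assumes the charger acts like a voltage source with a 20 A limit, that it can't pull current back out of the battery, and that all wiring and internal resistance is lumped into one hypothetical value (0.02 ohm).

```python
# Highly simplified model of current from a charger into a battery.
# ASSUMPTIONS (hypothetical, not Renogy's control logic): the charger is a
# voltage source with a current limit, it cannot sink current, and the
# path resistance is a single made-up lumped value.


def charge_current(charger_v: float, battery_v: float,
                   path_ohms: float = 0.02, limit_a: float = 20.0) -> float:
    """Estimated charging current in amps (0 if charger voltage <= battery voltage)."""
    if charger_v <= battery_v:
        return 0.0  # no voltage difference pushing current into the battery
    # Ohm's law on the voltage difference, capped at the charger's rated limit
    return min((charger_v - battery_v) / path_ohms, limit_a)


print(charge_current(12.80, 13.3))  # charger below battery voltage: 0.0
print(charge_current(14.4, 13.3))   # large difference: capped at the 20 A limit
```

In this model current only flows when the charger's output sits above the battery's voltage. A real charger also changes its own output voltage based on its charging logic, which this sketch doesn't try to reproduce.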

Anyway, I'm trying to get this figured out since we leave in a week for another long trip. Fortunately, because it's cold, the refrigerator that runs off the battery box won't be working very hard, and we will have electric hookups, so if needed I can charge the box from a 120V AC power source.

Thanks for any input!